Today, students around the nation use artificial intelligence to write essays, generate ideas for projects, write emails and more. Adapting to artificial intelligence has been easy for some and harder for others. The emergence of artificial intelligence in academia has opened up a whole new world for students and faculty, bringing endless excitement, fear and confusion.

Questions arise in academic spaces when some faculty and students refuse to embrace new technology. There is a duality to the emergence of AI technologies, which are perceived as both a threat and an opportunity. AI is already making its mark on the business world, where it is expected to create 12 million more jobs than it is projected to replace, in roles such as AI security engineer and AI ethicist, according to CompTIA. As a growing technology, AI has inevitably found its way into higher education.

Students may be using AI more often than they realize. Those who take advantage of the free Grammarly subscription provided by Iowa State, for example, are using a writing tool built on AI technology.

However, not everyone in higher education embraces these technological advancements, and some professors fear that the technology will hinder the students’ desire and ability to learn.

In a 2023 survey distributed by The Harvard Crimson, 47% of surveyed Harvard faculty said they believed artificial intelligence would have a negative impact on higher education.

But AI is here to stay, and eventually, everyone will need to embrace it, said Abram Anders, associate professor of English and an advocate for the use of artificial intelligence in higher education.

“I’m a little scared on behalf of students and faculty that suspicion about students and worries about them using these tools to cheat can create a negative and adversarial relationship,” Anders said. “That won’t really help anyone learn well. I think we get through that by learning how to design our learning better.”

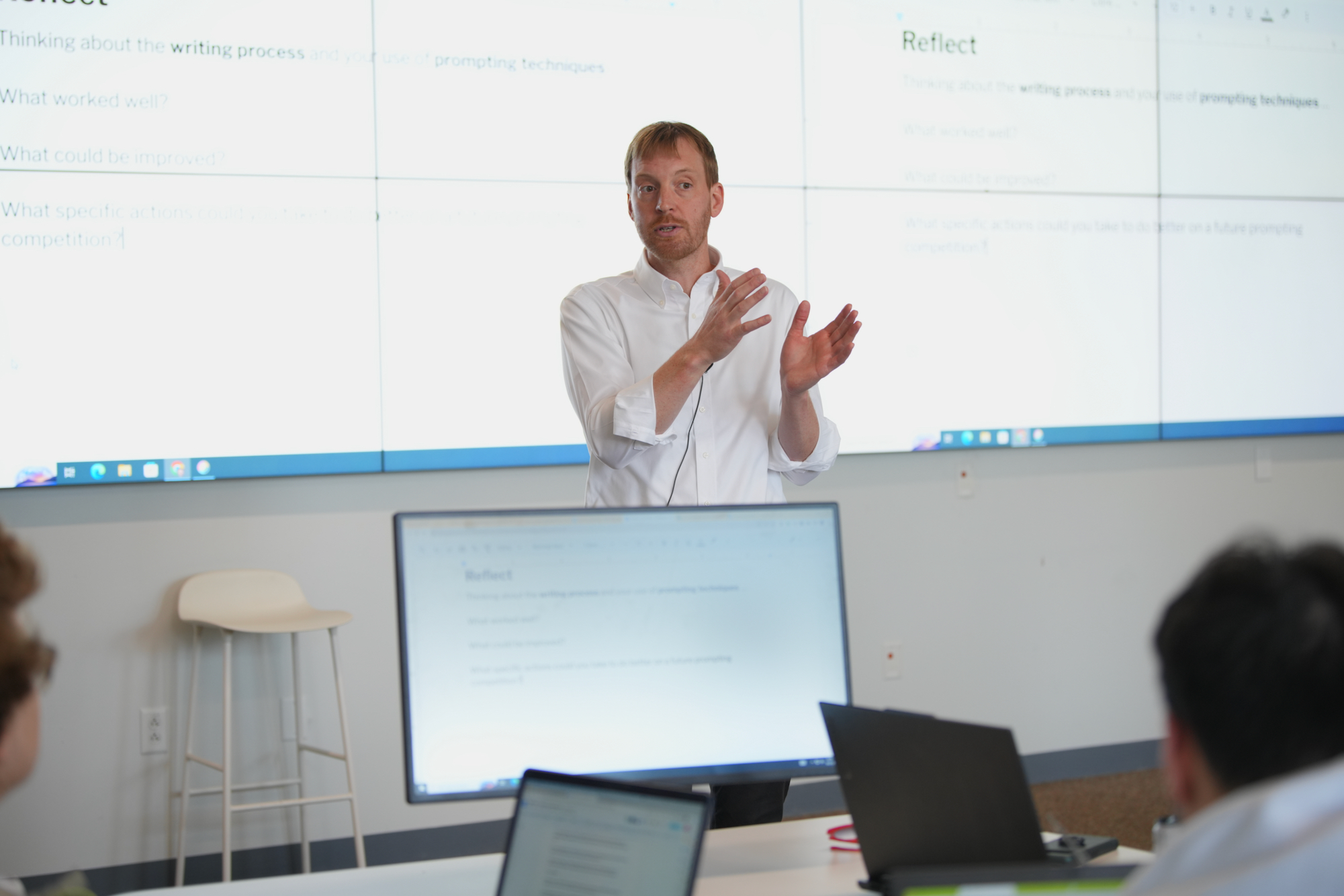

Anders has embraced AI since it emerged in academia in the last few years, but he acknowledges and understands the fears surrounding the technology. Anders and several other faculty members have spoken in the AI in Teaching Series organized by Iowa State's Center for Excellence in Learning and Teaching (CELT). By speaking to faculty, they hope to encourage educators to learn and adapt alongside their students. One of the ways Anders does so is by teaching his students how to write effective AI prompts.

Carrie Ann Johnson, interim coordinator of research and outreach for the Carrie Chapman Catt Center for Women and Politics, said she uses AI for everything from generating ideas to starting a press release, and she hopes the rest of her peers will get on board soon.

Johnson compared AI to email, a technology that once seemed trivial.

“Back when I started college in 1993, email was just becoming a thing,” Johnson said. “I remember having to send a professor three emails for the semester and feeling silly, and I thought, ‘Who even is going to use this?’ But then I started to realize that if I can’t keep up, I’ll fall behind.”

Christine Denison, an associate professor of accounting, has been involved with the use of AI in education since January 2023 and has since spoken at a number of AI in Teaching events. She said the most important thing faculty can do is be clear with students, especially since the technology is new to everyone.

“The biggest challenge that I’ve seen from a faculty perspective is different faculty members have different levels of expertise with AI, and they’re kind of all over the map with what they expect students to do with it,” Denison said. “It’s hard to come up with a policy because different people want to use it in different ways; we need to be more consistent about communicating to students what our expectations are.”

Denison said she firmly believes that every faculty member should include a statement on the use of AI in their class syllabus. CELT also encourages educators to be transparent and open about AI and their expectations, and its website has a section dedicated to sample syllabus and assignment statements.

Beyond adapting their expectations and rules to AI technology, professors can also adjust their teaching styles to encourage productive and ethical AI use. Most students will use AI in the real world after graduation, Denison said, so it is unrealistic to ban such technologies at an institution meant to prepare students for the future.

“The higher-level thinking where you’re bringing creativity and analysis and communication in, all of those are areas where AI could be useful. Those higher-level skills are where we would be doing students a disservice if we didn’t try to use it and learn about it with the students,” Denison said.

In writing classes, students can be especially tempted to have AI complete assignments. Anders advises professors to create assignments that require deeper engagement with the material so students can build foundational knowledge and curb the temptation to abuse AI capabilities.

“One of the things we do as educators a lot is called process pedagogy: We ask students to do small chunks of a project so that it’s less intimidating so that we can focus on each step at a time, take some research notes, draft some paragraphs,” Anders said. “If you’re doing that process-based approach where you’re really breaking it down and getting feedback on each step, then the AI isn’t as big of a threat.”

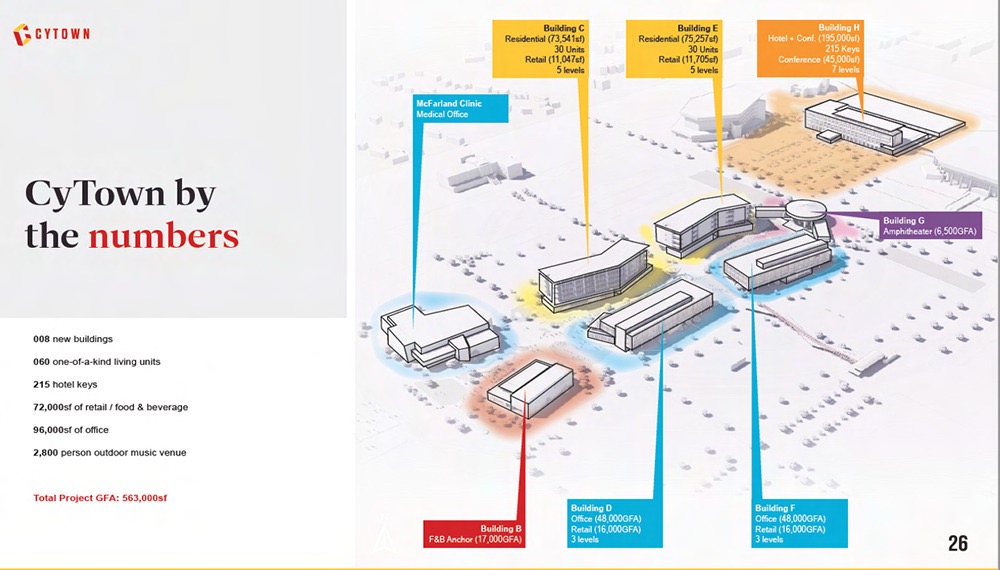

Iowa State has adapted to the growth of AI in several ways. In 2021, the university introduced a master of science in artificial intelligence. This development is unsurprising given that between July 2021 and July 2023, the number of LinkedIn job postings mentioning AI increased 2.2-fold, according to CNBC.

Samuel Fanijo, a student in the artificial intelligence master of science program, said in an email interview that educators need to adapt. He worries that if students aren't taught how to use AI properly, they will rely on AI tools rather than learn to collaborate with them, hindering their learning outcomes.

“Educators have a responsibility to embrace AI as it can enhance teaching and learning,” Fanijo stated. “They can adapt by undergoing AI training, integrating AI tools into teaching and fostering interdisciplinary collaboration to harness AI’s potential in research and education.”

In addition to the master’s program, the university is starting to offer classes incorporating AI for students at the undergraduate level.

Anders teaches an experimental course called English 222X: Artificial Intelligence and Writing. Some faculty feared AI would mean the end of the college essay, but Anders counters that argument, saying the class shows students how to work with AI to enhance their writing skills.

Anders said he encourages his students to view AI as a tool for enhancing creativity rather than one that simply does their writing for them, and he guides them through ethical uses of AI such as idea generation.

“A lot of us as professional writers have a team that we work with,” Anders said. “What’s cool about AI is it’s like this workmate who never gets tired of hearing your ideas, and it doesn’t always have the right idea, just like any human you might talk to. Once you can be more sophisticated and confident in your own abilities and you’re using these tools, then you can begin to treat it like a talented colleague who isn’t always right.”

Artificial intelligence is still evolving, and according to Johnson, members of the higher education community can embrace the emergence of such powerful technology and do their best not to let fear of the unknown get in the way. Both Denison and Anders said they hope faculty are doing their best to educate themselves and approach the issue with an open mind and a commitment to ethics. Faculty cannot adapt if they refuse to understand new technology, and that would leave students just as confused and misguided as their mentors.

“We’re still trying to find out what ethical use means,” Denison said. “To me right now, it’s being transparent…you have to be familiar with what AI does for what you’re teaching in order to tell if students are not using it ethically.”